The aim of learning is to close the knowledge gaps that people have and, ultimately, to improve their performance. In eLearning content development, the challenge is to present information in a way that does not exceed the known limits of working memory and that allows it to transfer into long-term memory. This post focuses on Mayer’s Cognitive Theory of Multimedia Learning (or eLearning, as it’s known now) and some of the resulting principles for designing multimedia instruction that, when applied, can improve learning delivered via eLearning.

The term multimedia is commonly defined as “any presentation containing words (such as narration or on-screen text) and graphics (such as illustrations, photos, animation or video)”.

3 Assumptions of the Cognitive Theory of eLearning

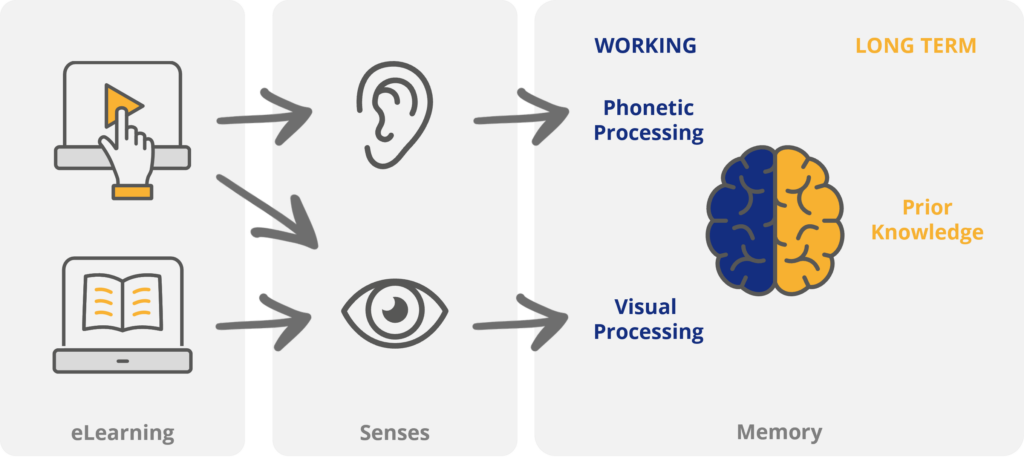

Drawing on Paivio’s Dual Coding Theory, Baddeley’s Working Memory Model and Sweller’s Cognitive Load Theory, Richard Mayer developed a theory specifically for multimedia learning. The Cognitive Theory of Multimedia Learning is based on three assumptions about how the human mind works during multimedia instruction:

- Dual channel – humans possess separate information processing channels for verbal and visual material.

- Limited capacity – the verbal and visual channels can only process a limited amount of information.

- Active processing – learning requires substantial cognitive processing via the verbal and visual channels.

For example, according to the theory, the text and video in an eLearning module are received by the learner via their sensory memory (ears and eyes). Selected words and images are then processed in working memory, and the result is integrated with prior knowledge held in long-term memory.

3 Types of Cognitive Demands

Multimedia presentations can overload the limited capacity of working memory in many ways. Mayer and Moreno highlight three types of cognitive demands placed on learners during multimedia instruction:

- Essential processing – required to make sense of the presented words and images.

- Incidental processing – required to process additional words and/or images that are not needed to make sense of the presented material.

- Representational holding – the cognitive processing required to hold visual or verbal material in working memory for a period of time.

Whilst essential processing is linked to the material being learned, incidental processing and representational holding place additional demands on working memory capacity and can interfere with the learner’s ability to process the material and transfer it to long-term memory. In addition, Clark and Mayer use the term extraneous processing to describe processing that is unrelated to the learning goal or that is caused by poor instructional layout.

4 Principles of Designing eLearning

The cognitive architecture underpinning Mayer’s theory has led, via numerous studies, to a set of principles for the design of multimedia instruction. These principles give instructional designers guidelines for creating multimedia instruction based on what is known about the processing capabilities of human memory. Let’s take a look at four of those principles.

Multimedia Principle

According to the multimedia principle, learning is improved when words and pictures are used in multimedia presentations rather than words alone. However, an image that is not directly related to the material being presented does not support the instruction: it won’t contribute to learning, yet it still has to be processed by cognitive resources that are already limited. ‘Decorative’ images should therefore be avoided in eLearning, and all on-screen objects should support the learning.

Contiguity Principle

The contiguity principle states that words and pictures should be integrated rather than separated. Moreno and Mayer identify two contiguity effects. The first is the spatial-contiguity effect, where words and pictures are either separated on the same screen, so that the learner has to combine them to understand the on-screen content, or placed on different screens, so that the learner has to move between screens to integrate the material and make sense of it. The second is the temporal-contiguity effect, where spoken words are presented before or after the on-screen visuals, so that learners have to hold some information in working memory and integrate it with other information in the presentation. In both cases, limited cognitive resources are spent on integrating the material rather than on learning it.

To reduce the spatial-contiguity effect, text should be integrated with the image or positioned close to the part of the image to which it refers. Clark and Mayer identify other examples of where the contiguity principle is violated in multimedia learning:

- Information is presented in a scrolling panel and the learner must locate some of it and integrate it with on-screen images.

- A question is asked and the feedback is placed on a different screen to the question.

- Links to references open in a new browser window.

- Audio narration is followed by a video rather than being presented at the same time.

Therefore, when designing eLearning, on-screen objects should be positioned in close proximity to their descriptions and, if audio narration is used, it should be synchronised with the objects being described.

Modality Principle

According to the modality principle, learning is improved when on-screen text that describes an image is replaced by audio. When only on-screen text and pictures are used, both have to be processed through the visual channel, which can become overloaded. Replacing the on-screen text with audio spreads the cognitive load across both channels, reducing the processing demand on a single channel.

Mayer and Moreno refer to the use of audio in this way as ‘offloading’, as some of the cognitive processing of the visual channel is offloaded to the verbal channel. The modality principle has the most research support of any of the design principles, and many experiments have reached the same conclusion: pictures combined with narration result in improved learning over pictures combined with on-screen text.

Redundancy Principle

Whilst replacing on-screen text with audio spreads cognitive processing across the processing channels, presenting audio together with identical on-screen text results in redundant information being processed by the learner, i.e. the same information is being processed by both channels. Studies show that learners who viewed multimedia presentations containing animation and narration outperformed those who viewed the same presentations containing animation, narration and on-screen text.

eLearning is widely used to deliver instruction in many organisations, and instructional designers should look to apply these evidence-based principles of multimedia instruction in their designs in order to improve learning outcomes.

References

Clark, R., & Mayer, R. E. (2008). E-learning and the science of instruction: Proven guidelines for consumers and designers of multimedia learning. John Wiley and Sons Inc.

Mayer, R. E., & Moreno, R. (2003). Nine ways to reduce cognitive load in multimedia learning. Educational Psychologist, 38(1), 43-52.

Moreno, R., & Mayer, R. E. (1999). Cognitive principles of multimedia learning: The role of modality and contiguity. Journal of Educational Psychology, 91(2), 358-368.